By Alex Hentschel and Tarak Ben Youssef

An ‘element of chance’ is ubiquitous in our world – not only in natural processes. Often, we purposefully engineer randomness into the machines and processes we build: games and raffles, visa lotteries, population samples for surveys, audits or safety screening, and the generation of keys and other cryptographic parameters. Commonly, the value and potential security guarantees are closely coupled to using ‘high-quality randomness’. For example, you would probably find a board game much less appealing knowing that the chances of winning are heavily skewed in your favor. Similarly, selecting a smaller sub-sample of medications or parts produced by a factory for detailed secondary inspection is only effective if each part has the same chance of being selected.

Most commonly, we rely on authorities and centralized parties to generate randomness, such as governments for tax audits or sports organizations for tournament seeding. However, this always requires trusting the authority or centralized party to be honest. For example, an allegation became public that the pairing of teams for a European soccer competition was biased by cooling some balls to make their identification possible during the blind draw.

Desired Properties

In the web3 domain, we desire a decentralized approach with rigorous mathematical guarantees as opposed to weak honesty assumptions about centralized actors. Specifically, we assume that any individual party or small group of actors could be compromised, e.g. by hacks, financial incentives, regulatory pressure, narrow politico-economic interest, etc.

On a technical level, the goal is to engineer a distributed source for random numbers with the desired properties below.

- Tamper-proof: despite malicious actions of a colluding group of adversarial actors (including miners!), the randomness should be:

  - Unbiasable, i.e. the distribution of the produced random values remains unchanged

  - Reliable, i.e. the protocol continues to produce random values

- Unpredictable: no party can predict the random values until they are produced by the protocol. In the context of on-chain randomness, we desire that the random values used by a transaction cannot be predicted before the transaction is committed in a published block. Note that unpredictability implies uniformity of the random outputs: each value within the output domain is produced with equal probability, and it is not possible to predict which values are more likely to be drawn.

- Verifiable: it must be possible for other nodes (and external clients) to verify that the random values were honestly generated.

- Efficient: in terms of communication and computation cost as well as latency

As we will explain below, we cannot rely on the honesty of block proposers, aka mining nodes, as this would undermine the core requirement of Byzantine Fault Tolerance [BFT].

Challenges with using block hashes (or other on-chain data)

Block hashes can be biased easily by the node proposing the block, because the proposer generally has some influence over, and knowledge of, what they put into the block. Let’s walk through a potential attack scenario targeting a hypothetical web-3 casino.

- We assume a single malicious consensus node, called Evil Edward. Edward can locally construct 1000 different variants of a block, e.g. by just varying which transactions he includes and their order. Typically, the combinatorial degree of freedom is very large. For example, if there are 10 transactions in his mempool, Edward can choose for each whether to include it in his proposal or not. This alone results in 2^10 = 1024 possible blocks Edward could construct.

- Given a candidate block, constructing the random seed via hashing (and/or including other on-chain information) is fully deterministic, and the algorithm is public. This means Edward can locally calculate the random seed for each transaction.

- This approach is similar in nature to hash-grinding or proof of work, in that Edward is varying the content of the block with the goal of finding one whose hash has some specific desired properties.

- Depending on the random distribution that the targeted smart contract samples from, Edward’s success probability varies with how much computation he can expend. For example, if the transaction is simulating a simple coin flip (such as Edward betting on roulette black or red, where either wins with probability ~50%), Edward’s chances to win are 50% when he constructs n=1 blocks, or 1 - 0.5^n in general for n blocks. In case the targeted smart contract draws multiple random numbers (e.g. poker) that need to satisfy some conditions, Edward might have to generate a larger number of blocks to win. Nevertheless, Edward’s ability to bias the random draw is only limited by his computational resources and can be arbitrarily close to 1.

- For a web-3 casino, margins are typically razor thin. The casino loses with probability 49% and wins with 51%. It might be that somebody walks out of the casino with a million dollars. But that is an outlier. Averaged over many games and many people, the casino will statistically earn more money than it loses … as long as its source of randomness is unbiased.

- Now Edward walks into the casino. He has enough money to play many games. He mainly submits gambling transactions shortly before it is his turn to propose a block. Internally, Edward builds many blocks but publishes only one block that seeds the random number generator for his transaction favorably. Edward needs to bias the probability of him winning only slightly, just enough so the odds of winning are higher for him than for the casino. He will slowly but surely drain the casino of all funds.

Conceptually, this is a ‘grinding attack’. Even if Edward’s success probability to post-select a favorable randomness is relatively small, it can still make a materially large difference. But worse, Edward’s ability to bias the random draw is only limited by his computational resources and can be arbitrarily close to 1.
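The grinding attack can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any real chain derives its seed: `block_hash`, `coin_flip` and `grind` are hypothetical names, the "block" is just an ordered tuple of transaction labels, and the contract's coin flip is taken to be the lowest bit of a SHA-256 hash. The closed form `win_probability` is the 1 - 0.5^n expression from the scenario above.

```python
import hashlib
from itertools import combinations


def block_hash(transactions: tuple) -> bytes:
    """Toy block hash: SHA-256 over the ordered transaction labels."""
    return hashlib.sha256("|".join(transactions).encode()).digest()


def coin_flip(seed: bytes) -> bool:
    """Toy contract logic (assumption): Edward wins if the lowest seed bit is 1."""
    return seed[-1] & 1 == 1


def grind(mempool: list, max_candidates: int):
    """Try up to max_candidates transaction subsets and return the first
    candidate block whose hash makes Edward win, or None if none is found."""
    tried = 0
    for size in range(len(mempool) + 1):
        for subset in combinations(mempool, size):
            if tried == max_candidates:
                return None
            tried += 1
            if coin_flip(block_hash(subset)):
                return subset
    return None


def win_probability(n: int, p_single: float = 0.5) -> float:
    """Chance that at least one of n independent candidate blocks is favorable."""
    return 1 - (1 - p_single) ** n


if __name__ == "__main__":
    mempool = [f"tx{i}" for i in range(10)]  # 10 transactions -> 2^10 subsets
    print("published block:", grind(mempool, max_candidates=1024))
    for n in (1, 2, 10, 20):
        print(n, win_probability(n))
```

Even before counting different transaction orderings, the ten-transaction mempool already gives Edward 1024 candidates, which is far more than he needs to tilt a coin flip decisively.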

This example illustrates an important insight: Any data object that a single node constructs and where the node has any influence on the object’s byte representation is not a secure source of randomness. The creator can always construct multiple variants internally and only release favorable ones.

Insecure sources of randomness are a widespread vulnerability in many blockchains, including Ethereum, and have caused enormous financial losses estimated to be over $30 million. In large part, we attribute this to a lack of secure primitives for smart contract developers to use. Instead, the challenge of secure randomness is commonly outsourced to smart contract developers, each team having to research and implement their own solution.

Flow chose to solve the secure random number generation challenge on a platform level. This frees the developers to focus on higher-level work, catalyzes creativity, and reduces cost, which in turn benefits users and fosters innovation.

Flow’s VRF-based Random Beacon

Flow natively implements a Distributed Random Beacon [DRB] protocol, which is collaboratively executed by Flow’s consensus participants and generates an unbiasable, unpredictable and verifiable source of randomness in a fully decentralized manner. As the following figure illustrates, Flow’s source of randomness is produced for every block. The source of randomness for a block is not part of the block itself: it is a signature over the block and is included in the child block.

So if Edward is constructing block B, he has to publish it first. Meaning at the time of block construction, the source of randomness is entirely unknown.

Flow’s threshold-VRF-based beacon:

Conceptually, Flow’s DRB utilizes a Verifiable Random Function [VRF], and more precisely a threshold version of it. The VRF chosen is a deterministic and unique signature scheme, namely the BLS signature scheme. In addition, BLS offers an elegant non-interactive threshold signature scheme. A (t, n)-threshold signature scheme enables any (t+1) parties among n to collaboratively generate an aggregated signature over a message m (the parent block hash in Flow’s case), using a secret key sk_G in a distributed manner. sk_G remains completely unknown to the parties, even after multiple signatures are generated, as long as at least (n-t) parties do not collude. The corresponding public key pk_G is publicly known and allows any party to verify the signatures, i.e. the VRF outputs.

For an optimal tradeoff between reliability and unbiasability, Flow chose t to be about half of n. At the time of writing, there are approximately n=121 consensus participants in the network, and 61 votes are required to reconstruct a threshold signature. On the happy path with all consensus participants voting, there are many ways to choose t+1 out of all the received votes. The inherent properties of the BLS threshold-signature scheme guarantee that the output signature is the same no matter which votes are chosen, and that no other distinct signature passes the VRF verification. These conditions are important to make sure the block proposer cannot iterate through possible random outputs, and that the protocol produces only one valid random value per round.
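The "same output no matter which votes are chosen" property can be illustrated with a toy model. The sketch below is not BLS and is not secure: it assumes a tiny prime-order subgroup modulo a small safe prime instead of a pairing-friendly curve, a trusted dealer who knows the secret (Flow uses a DKG instead), and hypothetical function names throughout. What it does share with a BLS threshold signature is the reconstruction step, which is Lagrange interpolation "in the exponent" over any t+1 signature shares.

```python
import hashlib
import random
from itertools import combinations

P = 2039  # toy safe prime, P = 2*Q + 1 (far too small for real use)
Q = 1019  # prime order of the subgroup of squares modulo P


def hash_to_group(message: bytes) -> int:
    """Toy hash-to-group: map the message to a non-trivial square modulo P."""
    digest = int.from_bytes(hashlib.sha256(message).digest(), "big")
    x = digest % (P - 3) + 2  # x in [2, P-2], so x*x is never 1 mod P
    return pow(x, 2, P)


def deal_shares(secret: int, t: int, n: int, rng: random.Random) -> dict:
    """Shamir-share `secret` with a random degree-t polynomial over Z_Q."""
    coeffs = [secret] + [rng.randrange(Q) for _ in range(t)]
    return {
        i: sum(c * pow(i, k, Q) for k, c in enumerate(coeffs)) % Q
        for i in range(1, n + 1)
    }


def lagrange_at_zero(i: int, ids: list) -> int:
    """Lagrange coefficient of participant i for interpolation at x = 0."""
    num, den = 1, 1
    for j in ids:
        if j != i:
            num = num * (-j) % Q
            den = den * (i - j) % Q
    return num * pow(den, -1, Q) % Q


def sign_share(share: int, message: bytes) -> int:
    """Signature share: H(m) raised to the participant's key share."""
    return pow(hash_to_group(message), share, P)


def reconstruct(sig_shares: dict) -> int:
    """Combine t+1 signature shares into the group signature H(m)^sk_G."""
    ids = list(sig_shares)
    result = 1
    for i, s in sig_shares.items():
        result = result * pow(s, lagrange_at_zero(i, ids), P) % P
    return result


if __name__ == "__main__":
    rng = random.Random(2023)
    t, n = 2, 5
    shares = deal_shares(rng.randrange(1, Q), t, n, rng)
    msg = b"parent block hash"
    outputs = {
        reconstruct({i: sign_share(shares[i], msg) for i in ids})
        for ids in combinations(shares, t + 1)
    }
    print("distinct reconstructed signatures:", len(outputs))
```

Because every (t+1)-subset interpolates the same polynomial at zero, the proposer gains nothing by picking and choosing among the received votes.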

Flow’s beacon setup:

The output signature is deterministically derived from sk_G and m, but it “looks” random as long as sk_G remains unknown. The output entropy is therefore derived from sk_G’s entropy. This group private key is implicitly generated during a dedicated setup phase, in which the n nodes collaboratively run a BLS Distributed Key Generation [DKG] protocol. The Flow protocol currently implements a joint verifiable secret sharing [VSS] protocol known as joint-Feldman. The protocol collects entropy from all participating consensus nodes to implicitly generate sk_G and share it among the nodes (each node i gets a share sk_i). The setup process also derives the corresponding public parts pk_i and pk_G. Flow collects and shares all the public data in a smart contract, which allows public verification of the beacon randomness output. A DKG needs to run when the set of consensus participants changes. The Flow protocol proceeds in epochs, each spanning 1 week. After the Staking Phase concludes, the consensus committee for the upcoming epoch runs the DKG as part of the Epoch Setup Phase. The resulting keys are used to generate randomness for each block of the epoch.
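The idea behind a joint-Feldman style DKG can be sketched as follows. This is a minimal toy model, not Flow's implementation: it assumes the same kind of tiny prime-order group as a stand-in for the BLS curve, assumes every participant is honest (no complaint or disqualification handling), and uses hypothetical names. Each node acts as a dealer of its own random polynomial, publishes commitments to the coefficients, and every node sums the shares it receives. The group key sk_G is the sum of all constant terms and is never computed by anyone.

```python
import random

P = 2039  # toy safe prime, P = 2*Q + 1 (far too small for real use)
Q = 1019  # prime order of the subgroup generated by G
G = 4     # a square modulo P other than 1, hence of order Q


def eval_poly(coeffs: list, x: int) -> int:
    """Evaluate a polynomial over Z_Q given its coefficients (lowest first)."""
    return sum(c * pow(x, k, Q) for k, c in enumerate(coeffs)) % Q


def feldman_verify(commitments: list, i: int, share: int) -> bool:
    """Check G^share == prod_k C_k^(i^k) using only public commitments."""
    expected = 1
    for k, c in enumerate(commitments):
        expected = expected * pow(c, pow(i, k, Q), P) % P
    return pow(G, share, P) == expected


def run_dkg(n: int, t: int, rng: random.Random):
    """Return (sk_shares, pk_shares, pk_group) for n honest participants."""
    # Each dealer j samples a private degree-t polynomial f_j.
    polys = [[rng.randrange(Q) for _ in range(t + 1)] for _ in range(n)]
    # Public commitments to every coefficient of every polynomial.
    commitments = [[pow(G, a, P) for a in poly] for poly in polys]
    sk_shares = {}
    for i in range(1, n + 1):
        received = [eval_poly(poly, i) for poly in polys]
        for c, s in zip(commitments, received):
            if not feldman_verify(c, i, s):
                raise ValueError("invalid share received")
        sk_shares[i] = sum(received) % Q  # sk_i = sum_j f_j(i)
    pk_group = 1
    for c in commitments:
        pk_group = pk_group * c[0] % P  # pk_G = prod_j G^(f_j(0))
    pk_shares = {i: pow(G, s, P) for i, s in sk_shares.items()}
    return sk_shares, pk_shares, pk_group


def interpolate_at_zero(shares: dict) -> int:
    """Demonstration only: recover sk_G from t+1 shares. Nodes never do this."""
    total = 0
    for i, s in shares.items():
        num, den = 1, 1
        for j in shares:
            if j != i:
                num = num * (-j) % Q
                den = den * (i - j) % Q
        total = (total + s * num * pow(den, -1, Q)) % Q
    return total


if __name__ == "__main__":
    sk_shares, pk_shares, pk_group = run_dkg(n=5, t=2, rng=random.Random(42))
    subset = {i: sk_shares[i] for i in (1, 3, 5)}
    print(pow(G, interpolate_at_zero(subset), P) == pk_group)
```

The final check only exists to show that the individual shares are consistent with the published group public key; in the actual protocol the shares stay on their respective nodes.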

Given that the message being signed is the parent block hash, the consensus node constructing the block can’t predict the source of randomness for its own block. It has to commit and publish the block first. Furthermore, the node constructing the child block (C in our example) containing the source of randomness for the parent (B in our example) also can’t influence the source of randomness, because there is only one valid VRF output (i.e. the BLS threshold signature), which is deterministically derived from sk_G and block B.

For further technical details, we invite interested readers to take a look at Flow’s second white paper (section 2.3) and Dfinity’s paper, which inspired Flow’s Random Beacon.

Performance of Flow’s Random Beacon

For performance reasons, we implemented the random beacon natively within the Flow protocol. The DKG runs automatically to prepare for the next epoch, consensus nodes distribute their threshold signature shares as part of their votes, and proposers reconstruct the threshold signature as part of building blocks. Thereby, secure randomness is available for each block within a single consensus round (about 1 second on mainnet, and 300ms proven to be attainable in the future solely via code optimizations).

In comparison, most blockchains offer insecure per-block random number generation natively within the protocol, which is biasable by miners. Developers on other chains commonly have to choose between using insecure random numbers available with similar latency as Flow’s secure random numbers, designing their own on-chain solutions with weak security guarantees, or falling back to off-chain random oracles, such as Chainlink VRF or drand. While the latter provide similarly robust guarantees as Flow’s native random beacon (as they are also based on a threshold-VRF), their latency is worse by a factor of 10x to 100x. Furthermore, off-chain solutions are much more cumbersome for a developer to integrate. While a discussion of Ethereum’s Randao is beyond the scope of the present article, we emphasize that there are latency and security challenges with using Randao and refer interested readers to Ben Edgington’s book for further details. Other common approaches in the blockchain space are protocols based on Commit-Reveal, Verifiable Delay Functions [VDF], other VRFs, or a combination thereof. To the best of our knowledge, most of these alternatives suffer from heavy latency and/or biasing vulnerabilities by malicious miners. Moreover, many of these protocols produce a fresh random value per epoch rather than per block as in the Flow beacon. For a broad review, we refer the interested reader to this article and this paper from a16z.

The Flow blockchain has absorbed the complexity of secure random number generation into the platform, so developers don’t have to worry about it. To the best of our knowledge, there are only 2 blockchains that have a secure source of randomness based on a threshold-VRF natively implemented within the protocol: Dfinity (which pioneered the use of threshold signatures for random number generation) and Flow (taking inspiration from Dfinity’s proposal).

The remaining probability for consensus nodes to bias the random beacon is negligible in practice

When discussing figure 2 earlier, you might have already wondered: Is there still some residual possibility for colluding consensus participants to bias the random beacon? Theoretically, there is, but we’ll see that it is nearly impossible to exploit in practice.

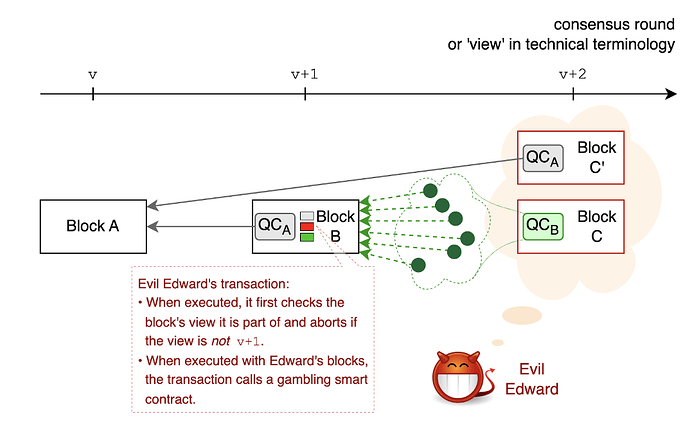

Let us assume that Evil Edward is selected to be the block proposer for consensus round v+2. As the leaders are known upfront, Edward can submit his gambling transactions ahead of time, hoping that the proposer prior to him will include them in their block B. Before doing anything else, Edward’s transactions check whether they are included in a block proposed in consensus round v+1 and abort otherwise. Thereby, Edward can submit many transactions, which only do something if they were in fact included in block B. Note that once Edward’s transaction is included in block B, the random numbers to be used by the transaction are implicitly committed to, without being predictable by Edward or any other party.

When round v+2 starts, Edward, as the designated proposer, receives the votes for block B from the prior round v+1, which he internally aggregates into a Quorum Certificate for block B [QCB]. At this point, Edward knows the source of randomness for block B and has two choices:

- He can construct and release block C as prescribed by the protocol.

- Or he discards the QCB (including the source of randomness for block B contained therein) and pretends he didn’t get enough votes for block B. The protocol will time out, allowing Edward to orphan block B and to construct a child for A. (For readers interested in the details of the consensus timeout mechanics, we recommend our recent article Jolteon: Advancing Flow’s consensus algorithm.)

As we discuss below, there is still a theoretical possibility for a malicious proposer to bias the random beacon by orphaning blocks they don’t like. We emphasize that “biasing the random beacon” does not mean that a malicious proposer is able to choose one value over another, which is the case for example in Ethereum’s Randao. In Flow, the extent to which a proposer can bias the DRB is either accepting the randomness that they generated or falling back to another unknown outcome to be generated by a different node in the future.

- When using the block hash as entropy, a malicious node can easily construct thousands or millions of candidates and publish the one it likes best. In contrast, with a threshold signature implemented within the consensus protocol, an attacker only has one shot: they build on top of the block or orphan it. This reduces the success probability for an attacker by several orders of magnitude and illustrates the importance of unique threshold signatures for unbiasable randomness.

- In Flow, nodes maximize their reward when following the happy path protocol. Specifically, block proposers are financially incentivised to avoid falling back on timeouts. In other words, there is a financial cost for nodes to collude with Edward and help him to orphan block proposals.

- Further, stronger mitigations are straightforward to implement. In particular, Edward’s biasing attempts only work if he is the only node collecting votes for block B. But that is merely a convention, which is easily changed. Instead of sending their votes only to the immediately next proposer (k=1), consensus participants could send their votes to the next k proposers. The probability that all k proposers collude with Edward rapidly approaches zero even for reasonably small k (e.g. k=8 to k=20). Honest nodes that also constructed QCB would broadcast it, and the timeout protocol would ensure that Edward is forced to build on top of block B. We emphasize that this biasing mitigation still only requires linear message complexity of the consensus protocol.
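To get a feeling for how quickly the probability in the last mitigation shrinks, here is a rough back-of-the-envelope sketch. It rests on two simplifying assumptions that are not taken from the protocol specification: each of the next k proposers is drawn independently, and each is colluding with probability equal to a fixed Byzantine fraction, here one third as the usual BFT upper bound. The function name is hypothetical.

```python
def all_colluding_probability(byzantine_fraction: float, k: int) -> float:
    """Probability that k independently drawn proposers are all colluding
    (simplifying assumption: independent draws with a fixed fraction)."""
    if not 0.0 <= byzantine_fraction <= 1.0:
        raise ValueError("byzantine_fraction must be within [0, 1]")
    if k < 1:
        raise ValueError("k must be at least 1")
    return byzantine_fraction ** k


if __name__ == "__main__":
    for k in (1, 8, 20):
        print(f"k={k}: {all_colluding_probability(1 / 3, k):.3e}")
```

Under these assumptions the attack already needs a roughly one-in-several-thousand coincidence at k=8, and the chance drops well below one in a billion at k=20.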

As a formal write-up of our argument above is still pending, we word our conclusion as a well-founded conjecture: successfully biasing the DRB is nearly impossible for Edward in practice.